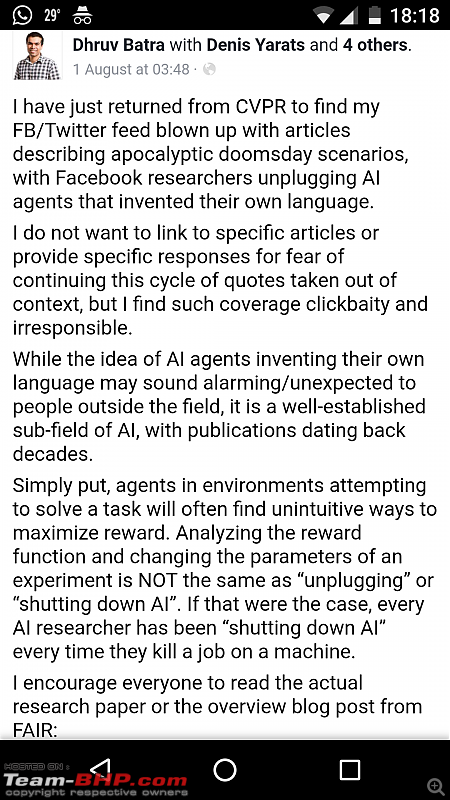

This news caused some sensation on FB last month. Let me repeat what I said there, and take it further.

This language developed by AI is a very utilitarian language, which is not superior to English or any human language. At the fundamental level, computers are really dumb. But they are extremely fast. A simple action by a computer requires hundreds of machine-level instructions, if not thousands. That is because we have to spell out everything to a computer in clear, unambiguous terms.

So the AI developed a very plain language which conveys absolute meaning, unlike human language which is extremely confusing to a computer. Humans are capable of using the same word to mean many different things based on context or tone. Computers at the basic level cannot do it.

Ask anybody who has designed compilers (like me): converting a human-understandable computer language (C/Java/Python) into machine-understandable language is very tedious. You will realize how much breakdown one has to do before the computer understands your intention.

A human may take a few minutes to work out 2345 × 2π, while the computer might take a microsecond to do the same. But the human knows why he or she is multiplying: to get the circumference of a circle. The computer doesn't know this. That means the human has intent; the computer doesn't.
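To make the "breakdown" a little more concrete, here is a minimal sketch using Python's standard `dis` module, which lists the bytecode instructions the interpreter runs for a function. The function name `circumference` and the reading of 2345 as a radius are my own assumptions for illustration. Note also that bytecode is an interpreter-level instruction set, not real machine code; the actual machine instructions underneath would be far more numerous.

```python
import dis
import math


def circumference(radius):
    # The human "intent" (circumference of a circle) lives only in the
    # function name and this comment; the interpreter just sees arithmetic.
    return 2 * math.pi * radius


# Collect the individual bytecode instructions behind that one line.
instructions = list(dis.get_instructions(circumference))

for ins in instructions:
    print(ins.opname, ins.argrepr)

print(len(instructions), "bytecode instructions for one line of arithmetic")
print(circumference(2345))
```

The exact instruction names and count vary between Python versions, but in every case a single line of arithmetic turns into several separate steps (load a value, look up an attribute, multiply, return), and none of them say anything about circles.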

Recently a government official told my acquaintance, "Give me a pink one". Even though my acquaintance was hearing the expression for the first time, the intent was clear to him: it referred to a ₹2000 note. Meanwhile the computer might find it easier to say "Give 1 rupee" 2000 times, and still be 1000 times faster than a human.

For example, this is what those AI bots did:

Quote:

In one exchange illustrated by the company, the two negotiating bots, named Bob and Alice, used their own language to complete their exchange. Bob started by saying "I can i i everything else," to which Alice responded "balls have zero to me to me to me…"

So what happened in the FB research is trivial: the AI was just doing what it was programmed to do. But let's take it further.

AI sounds very intelligent for most practical purposes, hence the name artificial intelligence. This is the result of many layers of logic that instruct the computer to mimic the human way of thinking. The final barrier is intent. Does AI have intent? By itself, NO. But it can be programmed to have it. If you program the computer to generate intent, that is where Elon Musk's nightmare will start coming true.

This is the fundamental difference between the thoughts of Mark Zuckerberg and Elon Musk. Who controls the intent?

The FB AI bots creating a language to improve communication was the result of the programmed intent to improve communication. Since human language is too vague for computer use, they were programmed to improve it. This is what I think Zuckerberg feels, and therefore he isn't afraid of AI.

However, what if the intent is not clearly programmed? Or what if an anarchist or psychopath programs malicious intent into the most powerful AI machine to take over the world? Can humans out-think the AI to take control back? This is what Elon Musk is talking about.

Let's go further. Think about evolution. It took humans more than 500 million years to evolve from a single-cell organism, because evolution by natural selection is so slow. However, computers can do similar evolution in a matter of minutes. It took humans 400 years to go from thinking the sun goes around the earth to landing a man on the moon. But computers are at least a million times faster at thinking. Once AI is mature enough to start evolving, it can evolve so fast that the human brain will be incapable of instructing such an advanced computer what to do. This is what Elon Musk fears.
